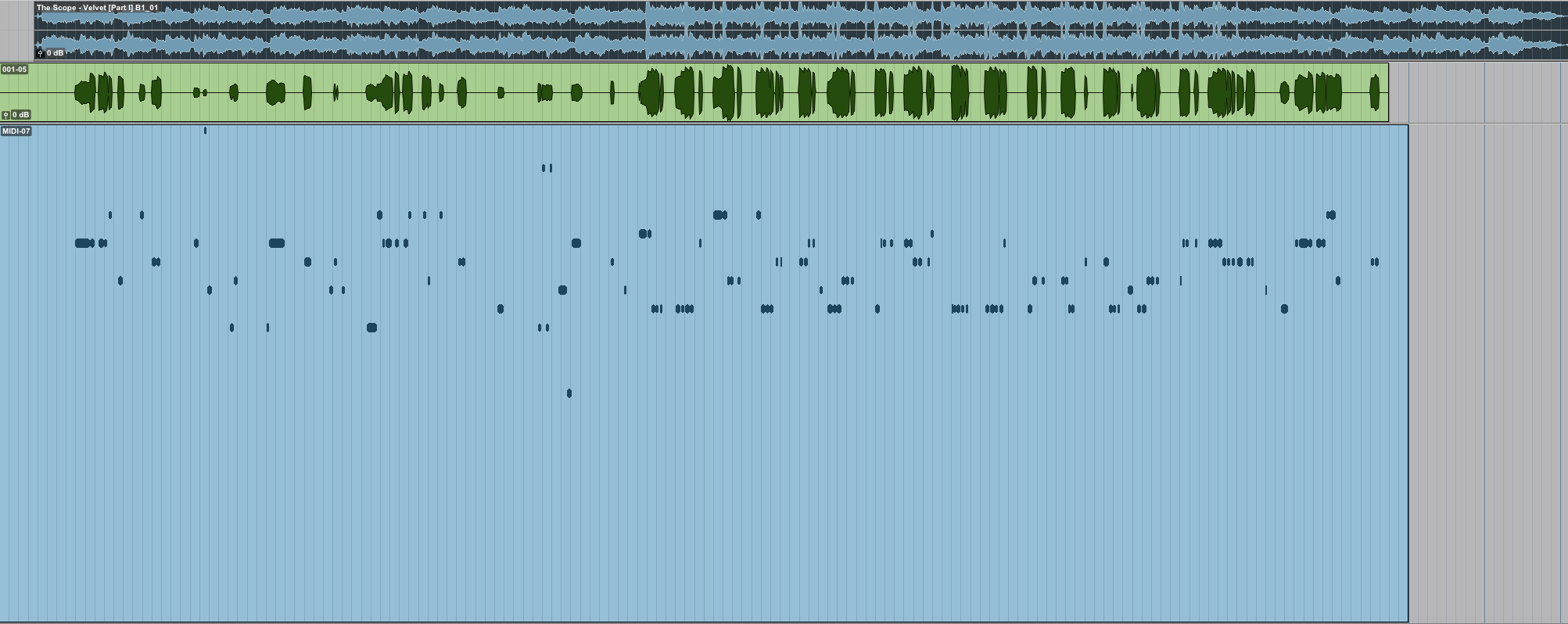

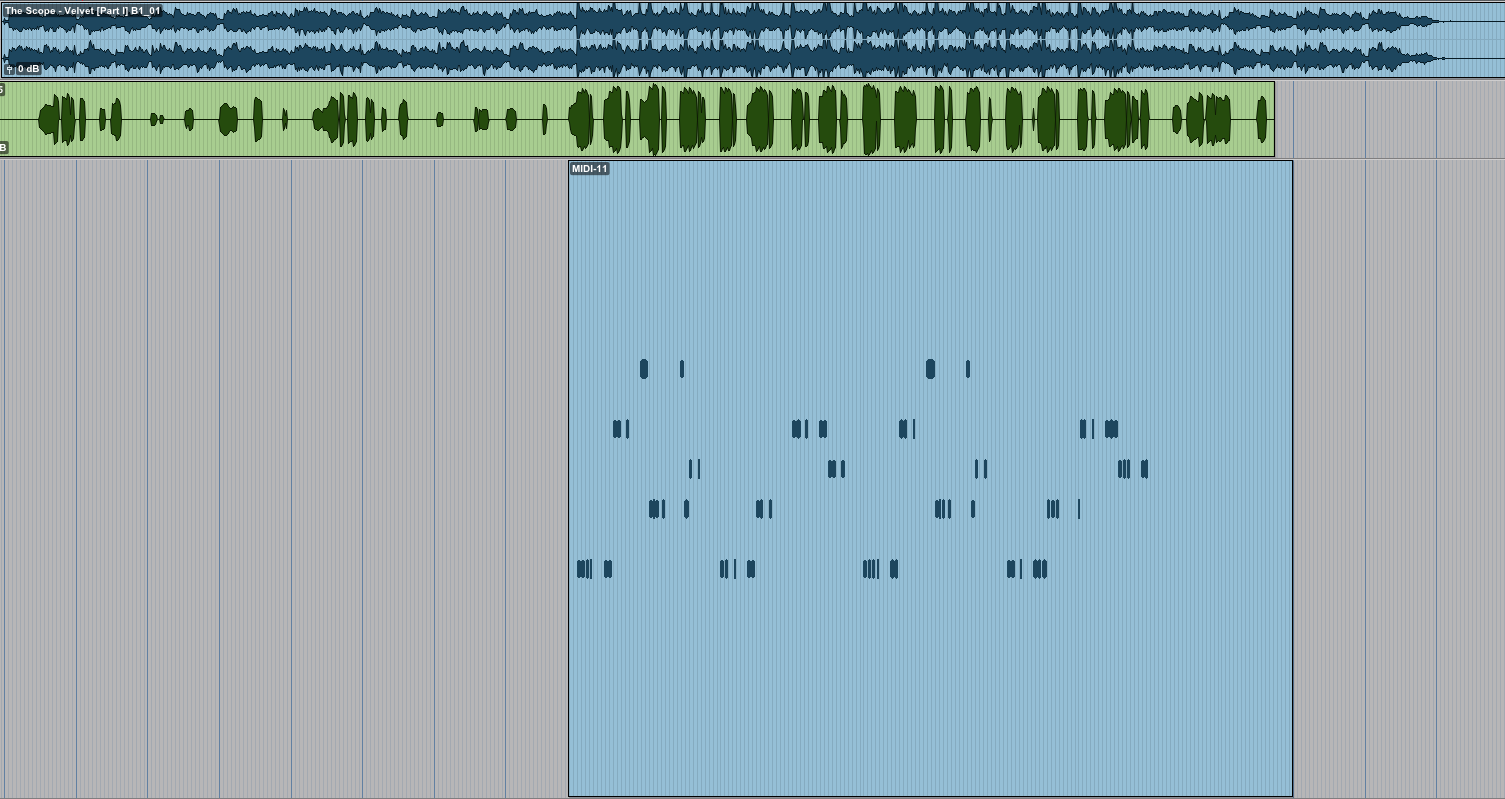

BassNet can be a strong ally for artists: start from a draft and build a part you would not have imagined without it.

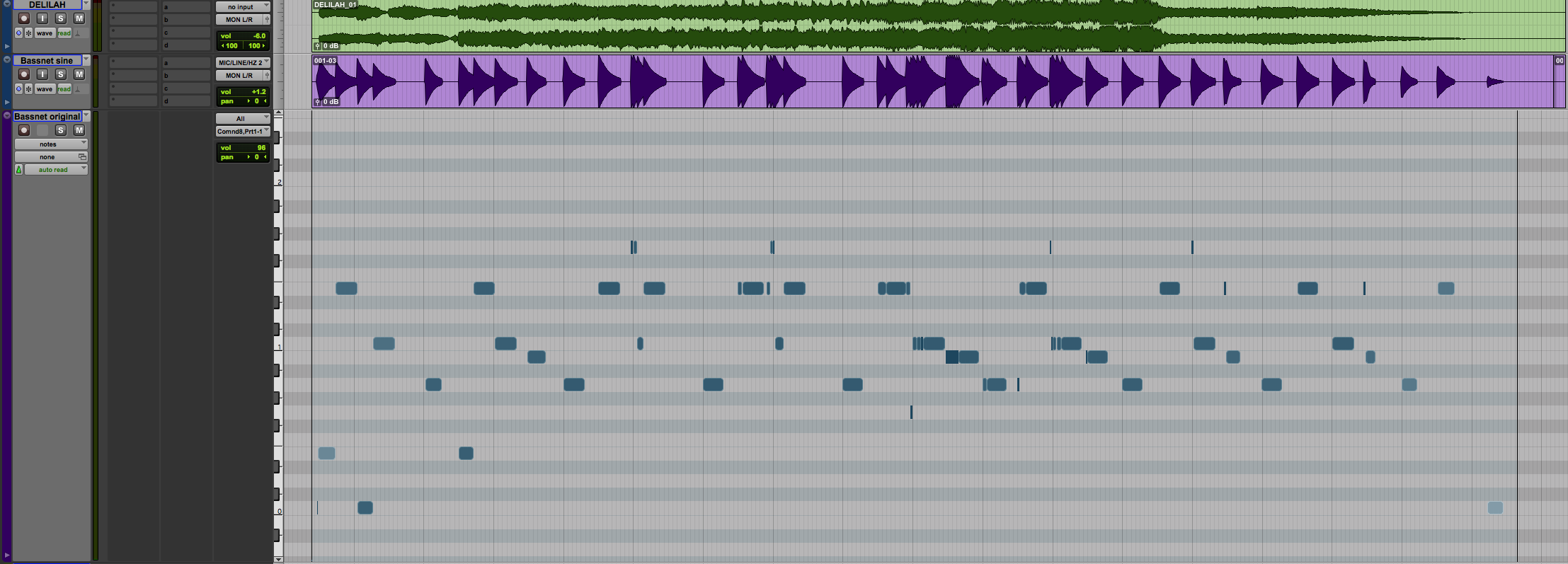

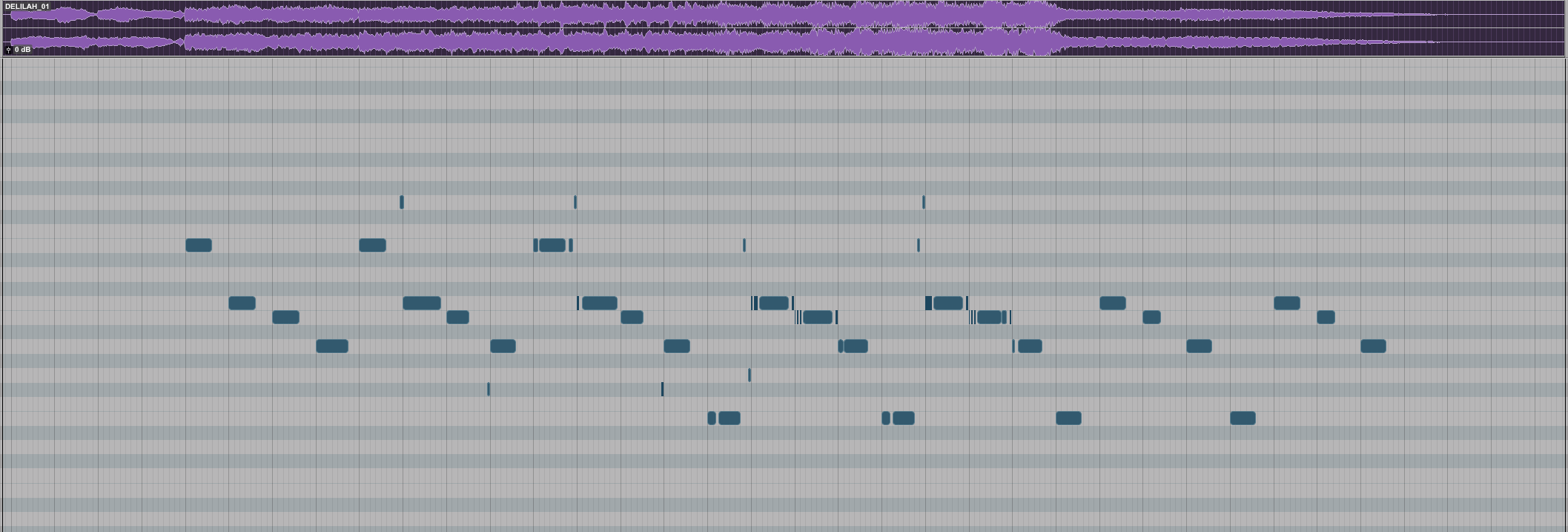

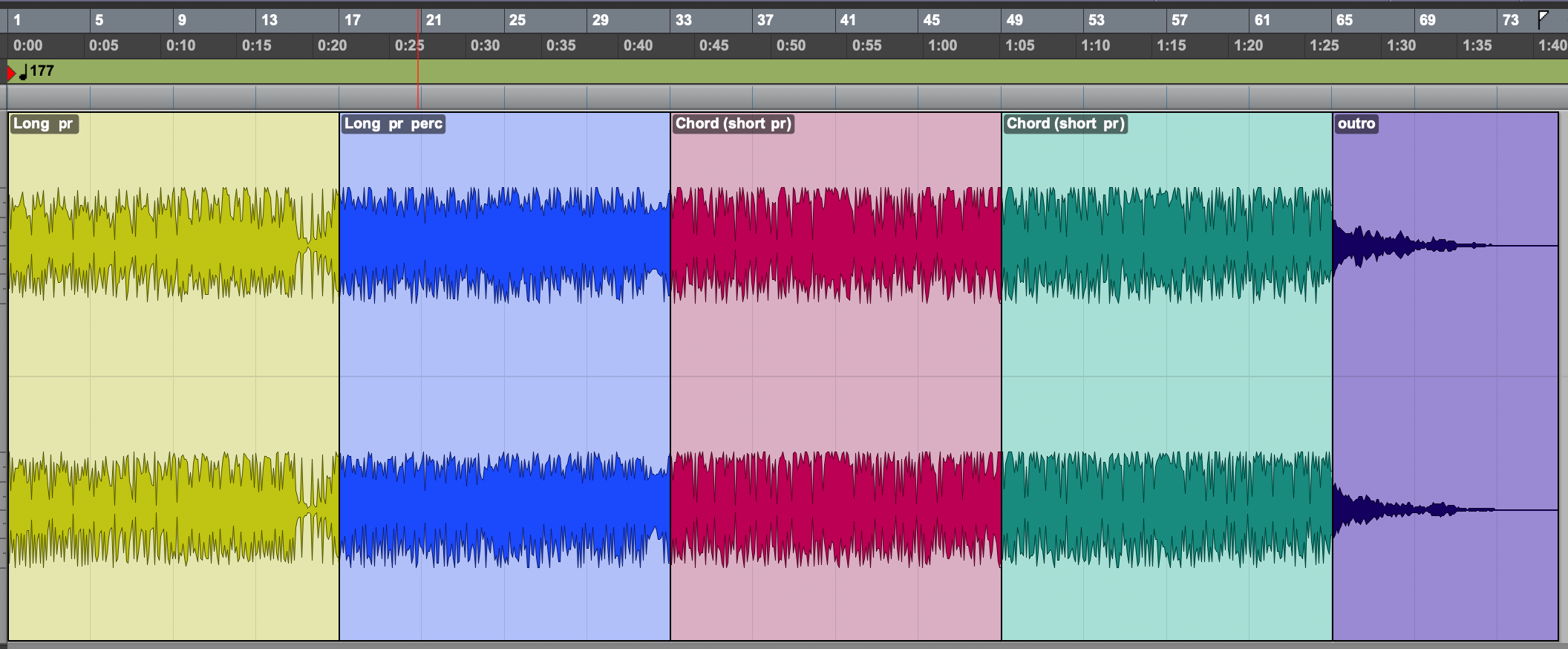

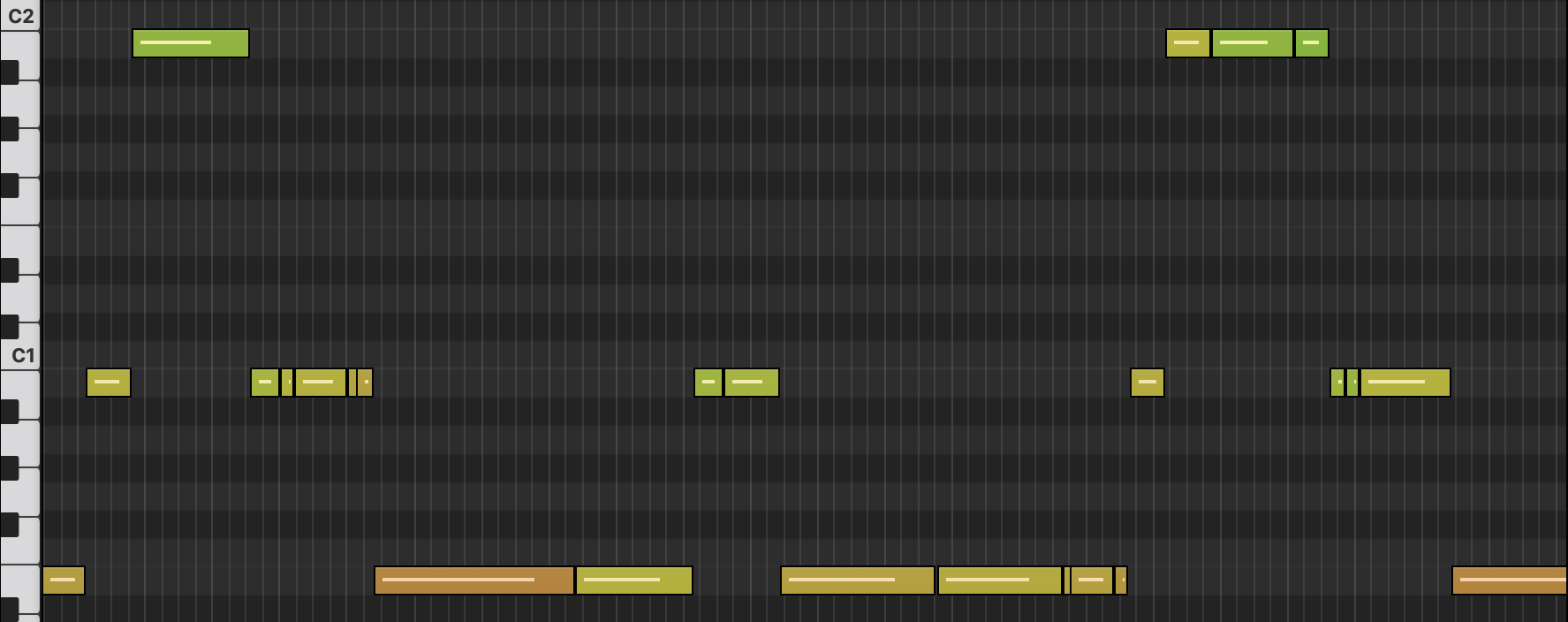

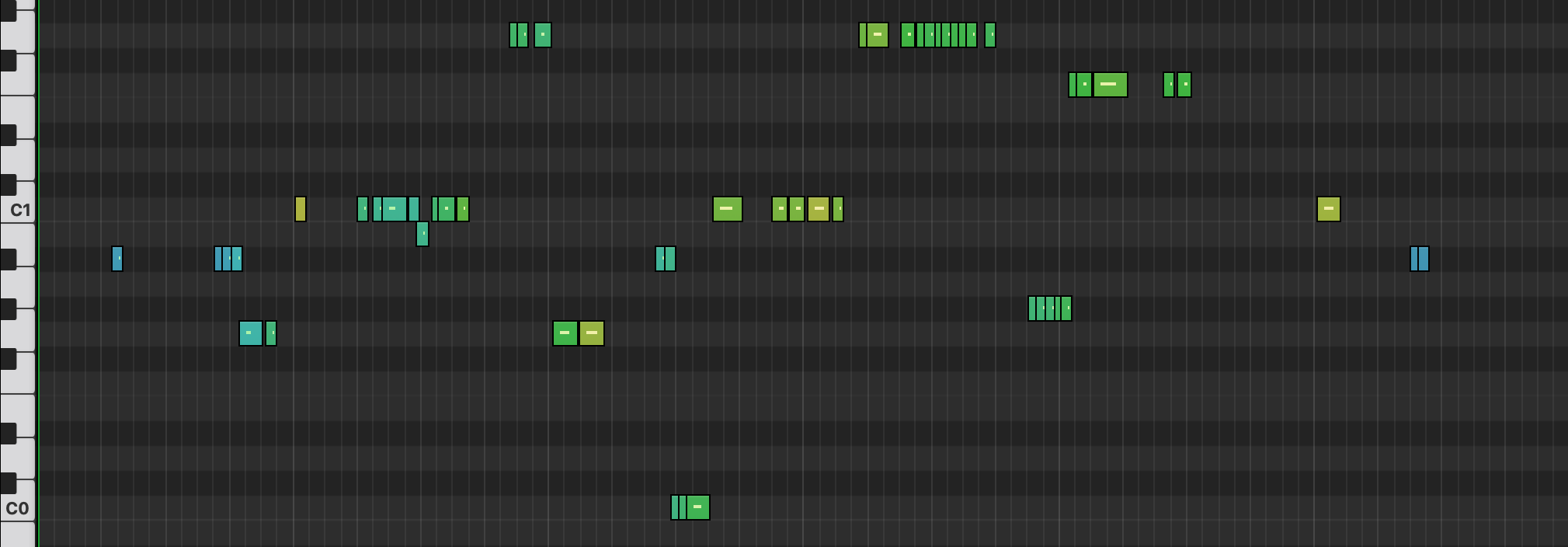

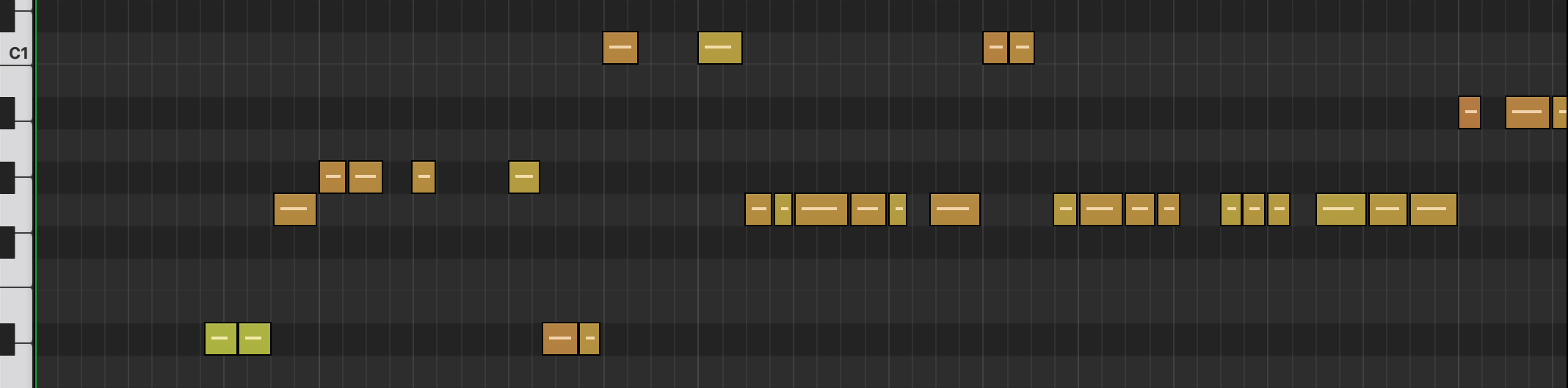

[A]fter few tries there’s a specific groove, ... a kind of reggae pattern has been revealed to my ears... So cool, because I would never have thought of it on my own! That was THE good fragment for me: short notes, very airy, contrasting with the long smooth notes / ambiance you can hear in the middle of the arrangement, so I decided to edit this part and make it the main pattern for the bass.

BassNet definitely takes me outside of my usual patterning concepts. It really does make me end up with something unexpected.

...it generated the “perfect” melodies through focusing on the chord changes.

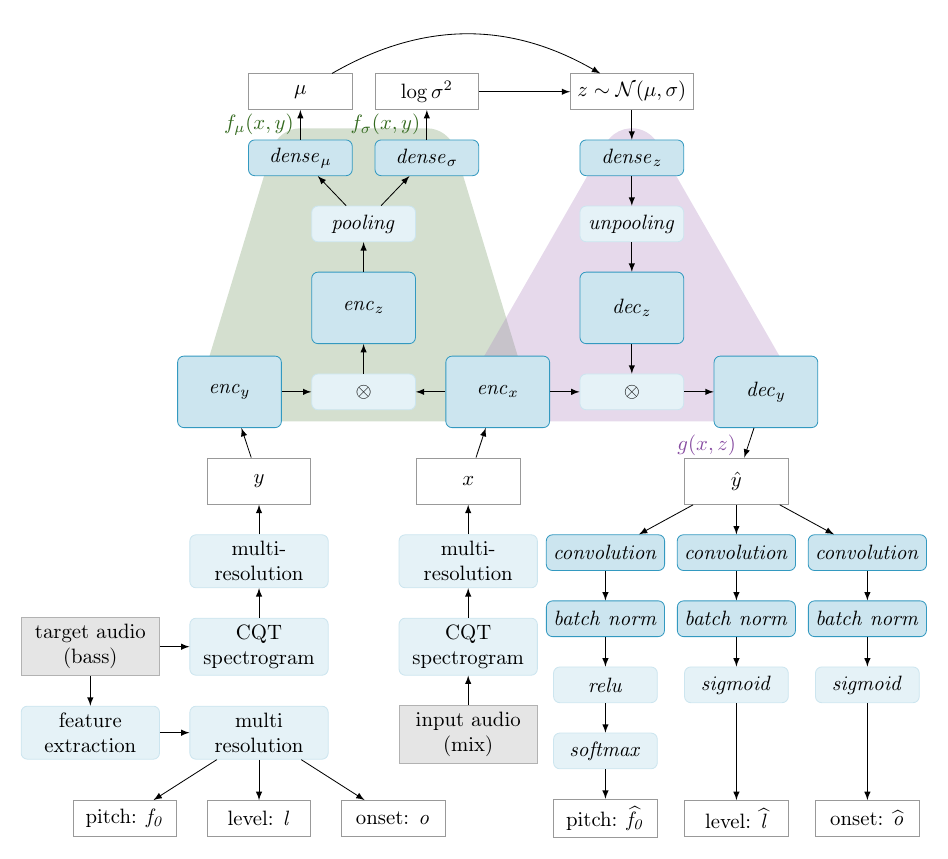

Making AI work for artists

Sony Computer Science Laboratories is a fundamental research lab whose Music and AI team has a distinctively artist-centric vision: a new generation of AI-driven music production tools that augment creativity and benefit the music creation process. We believe that rather than producing novel pieces of music at the press of a button, AI music tools should trigger the imagination, offer opportunities for interaction, and blend into the artistic workflow.